Voice-controlled Knowledge Base application with Azure Cognitive Services

Did you know that Azure provides a comprehensive set of AI services that let you build, for example, voice-controlled applications? More information about the Azure AI Platform can be found here.

This blog post briefly introduces Azure Cognitive Services and Azure Question Answering, and shows how to create a voice-controlled sample knowledge base application that listens to the user's spoken question and then tries to find an answer to it from the knowledge base sources.

Azure AI Platform

Before starting, a few words about the Azure AI Platform services covered in this blog post.

What are Azure Cognitive Services and, in particular, the Speech SDK?

Azure Cognitive Services enable you to build cognitive solutions that can see, hear, speak, understand, and even make decisions.

Azure Cognitive Services are cloud-based artificial intelligence (AI) services that help you build cognitive intelligence into your applications. They are available as REST APIs, client library SDKs, and user interfaces. You can add cognitive features to your applications without having AI or data science skills. Source

The Speech SDK (software development kit) exposes many of the Speech service capabilities, so you can develop speech-enabled applications. The Speech SDK is available in many programming languages and across platforms. The Speech SDK is ideal for both real-time and non-real-time scenarios, by using local devices, files, Azure Blob Storage, and input and output streams. Source

Language support in the Speech service is already comprehensive. You can check which languages are supported here.

What is Azure Question Answering Service?

Microsoft describes Azure Question Answering like this:

Question answering provides cloud-based Natural Language Processing (NLP) that allows you to create a natural conversational layer over your data. It is used to find the most appropriate answer for any input from your custom knowledge base (KB) of information.

Question answering is commonly used to build conversational client applications, which include social media applications, chat bots, and speech-enabled desktop applications. Several new features have been added including enhanced relevance using a deep learning ranker, precise answers, and end-to-end region support. Source

Azure Question Answering has replaced the earlier QnA Maker service, which provided almost identical functionality. If you're still using QnA Maker, you can follow these steps to migrate to the new Question Answering service.

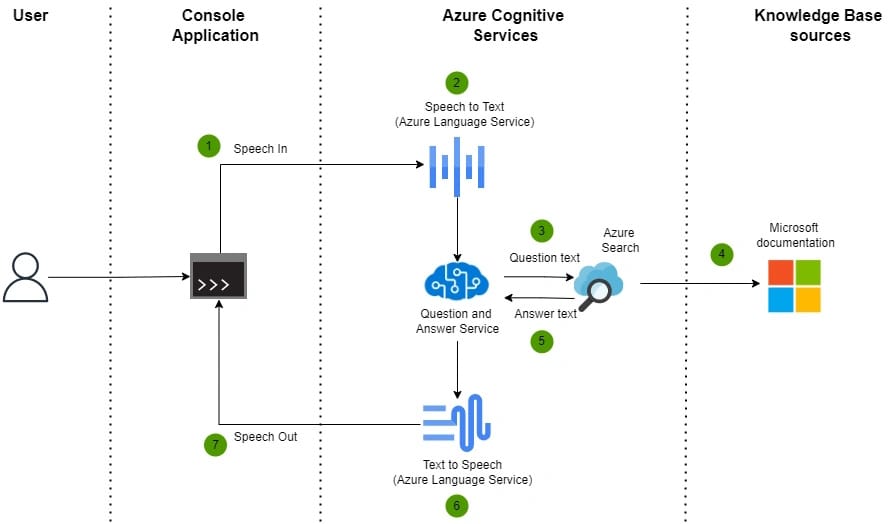

Overview of voice-controlled Knowledge base application

The sample application first listens to a spoken question from the user and converts the speech to text. Next, it searches the knowledge base for an answer using the question text. Finally, when an answer is found, it is read aloud to the user.

Speech-to-text and text-to-speech are handled by Azure Cognitive Services (the Speech service), and the knowledge base functionality is provided by Azure Question Answering.
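To make the three steps concrete, here is a minimal sketch of the flow in plain C#. The Azure calls are replaced by hypothetical delegates (`listen`, `findAnswers`, `speak`) so the orchestration logic runs without any Azure resources; the fallback message for an empty answer list is an illustrative assumption, not part of the original sample.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical stand-ins for the Azure calls. In the real application these would wrap
// SpeechRecognizer (speech-to-text), QuestionAnsweringClient and SpeechSynthesizer (text-to-speech).
Func<Task<string>> listen = () => Task.FromResult("What are Azure Cognitive Services?");
Func<string, Task<List<string>>> findAnswers = q =>
    Task.FromResult(new List<string> { "Azure Cognitive Services are cloud-based AI services." });
var spoken = new List<string>();
Func<string, Task> speak = text => { spoken.Add(text); return Task.CompletedTask; };

// One question/answer round: listen, search the knowledge base, read the answer aloud.
async Task<string> AskOnceAsync()
{
    var question = await listen();                  // 1. speech-to-text
    var answers = await findAnswers(question);      // 2. knowledge base search
    var answer = answers.Count > 0 ? answers[0] : "Sorry, I could not find an answer.";
    await speak(answer);                            // 3. text-to-speech
    return answer;
}

var result = await AskOnceAsync();
Console.WriteLine($"Answer: {result}");
```

Keeping each service behind a small function like this also makes it easy to test the flow without a microphone or speaker.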

Let's start

Configure related Azure AI Platform services

Azure Cognitive Services is the heart of this solution: it provides both the Speech and the Question Answering services.

1. Create a Cognitive Services resource via the Azure portal or the Azure CLI

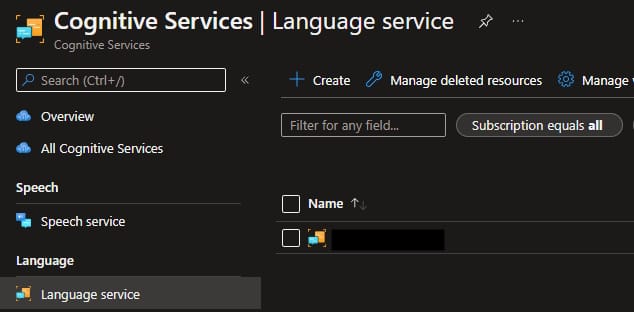

2. Create a Language service resource

Azure Cognitive Service for Language provides the knowledge base functionality (the Question Answering service) for this sample. You can create a Language service resource under Cognitive Services / Language service.

3. Open Language Studio

Language Studio is a set of UI-based tools that lets you explore, build, and integrate features from Azure Cognitive Service for Language into your applications. Language Studio is available at https://language.cognitive.azure.com/

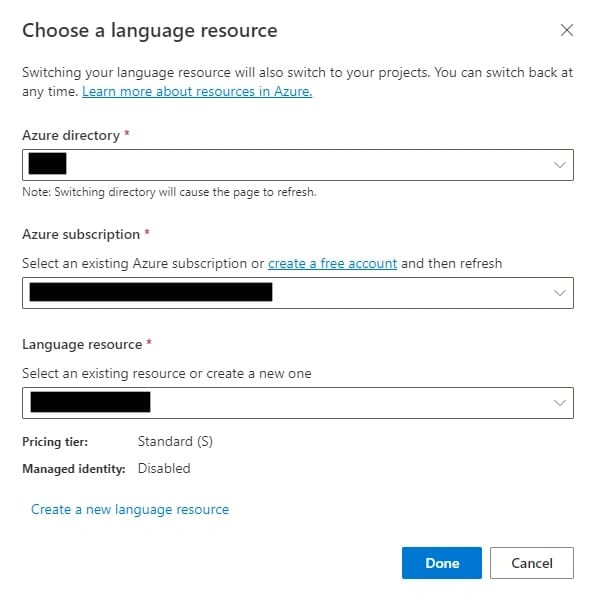

4. Select Language Service in Language Studio

Before creating a new project, you need to select the Language service resource you just created. You can open this dialog from the profile menu in the top-right corner.

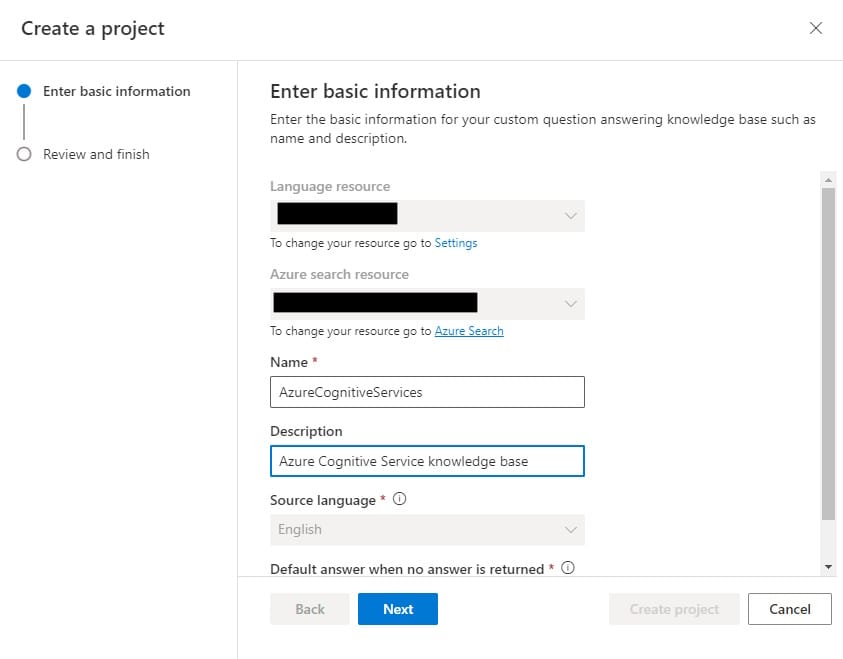

5. Create a new project

On the Language Studio front page, select Create new and then Custom question answering.

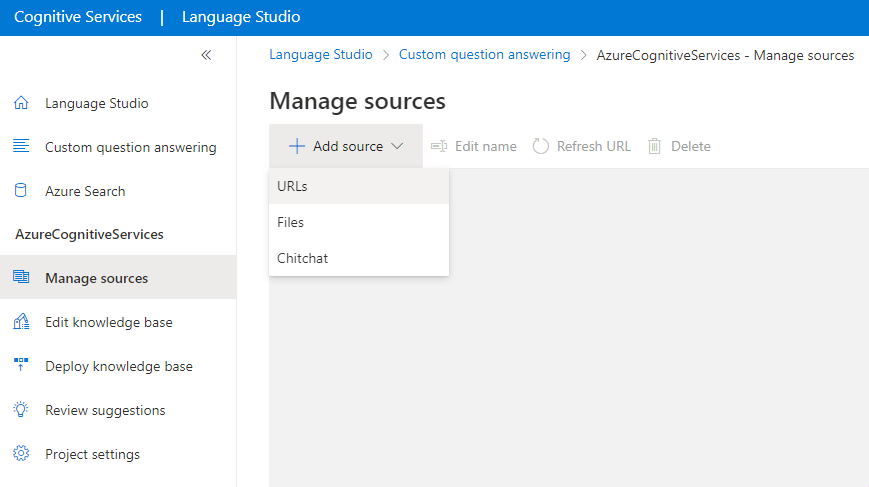

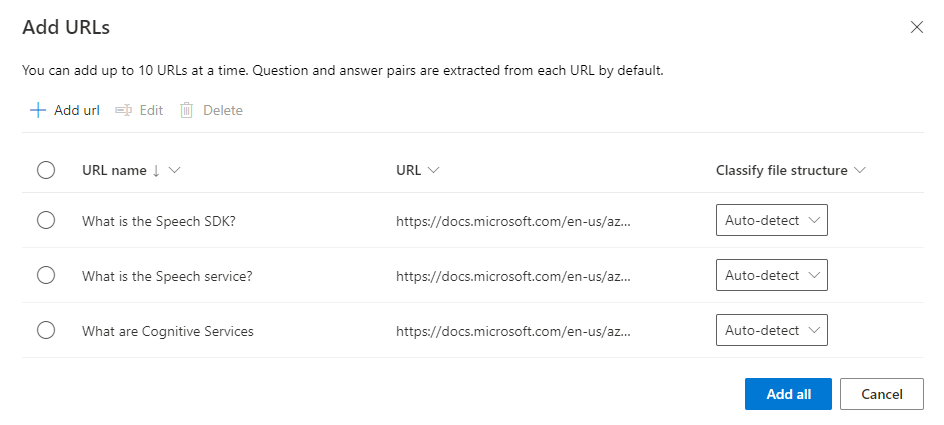

6. Open Manage sources

Under Manage sources, you can add data sources to your knowledge base, for example URLs or files.

In this sample, I'll add some Microsoft Docs articles.

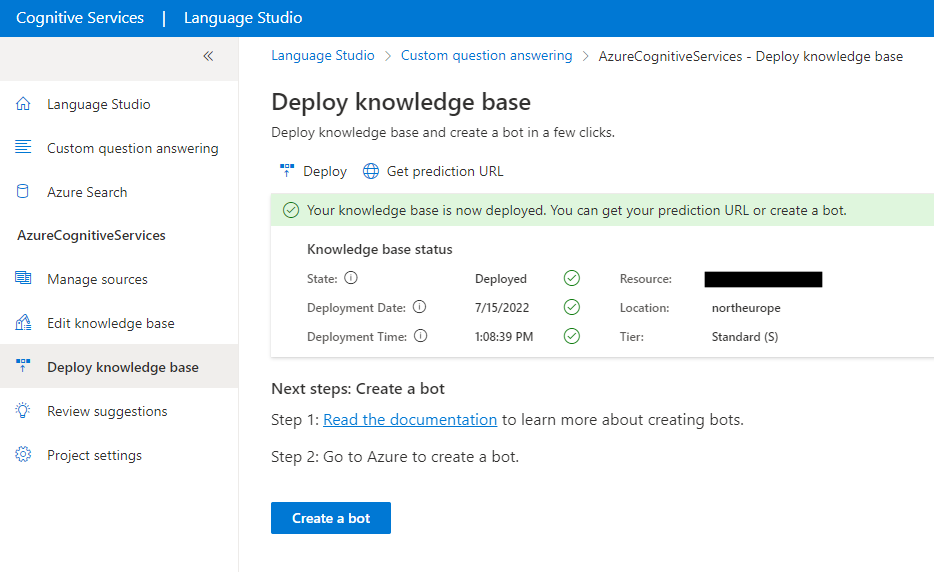

7. Select Deploy a knowledge base

You need to deploy the knowledge base before it can be queried.
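Once deployed, the project can also be queried over REST, which is a quick way to check the knowledge base before wiring up the console application. The sketch below only builds the request with `System.Net.Http` and does not send it. The route `/language/:query-knowledgebases`, the `api-version=2021-10-01` value and the `Ocp-Apim-Subscription-Key` header reflect the Question Answering REST API as I understand it, so verify them against the current API reference; the endpoint, key, project and deployment values are placeholders.

```csharp
using System;
using System.Net.Http;
using System.Text;
using System.Text.Json;

// Placeholder values: replace with your Language resource endpoint, key, project and deployment.
var endpoint = "https://my-language-resource.cognitiveservices.azure.com/";
var key = "YOUR-LANGUAGE-KEY";
var projectName = "AzureCognitiveServices";
var deploymentName = "production";

// Builds (but does not send) a query against the deployed knowledge base.
// The route and api-version are assumptions to check against the REST reference.
HttpRequestMessage BuildQuery(string question, int top)
{
    var url = $"{endpoint.TrimEnd('/')}/language/:query-knowledgebases" +
              $"?projectName={Uri.EscapeDataString(projectName)}" +
              $"&deploymentName={Uri.EscapeDataString(deploymentName)}" +
              "&api-version=2021-10-01";
    var body = JsonSerializer.Serialize(new { question, top });  // {"question":"...","top":3}
    var message = new HttpRequestMessage(HttpMethod.Post, url)
    {
        Content = new StringContent(body, Encoding.UTF8, "application/json")
    };
    message.Headers.Add("Ocp-Apim-Subscription-Key", key);
    return message;
}

var request = BuildQuery("What are Azure Cognitive Services?", 3);
Console.WriteLine($"{request.Method} {request.RequestUri}");
// To actually send it: using var http = new HttpClient(); var response = await http.SendAsync(request);
```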

Console application

The console application orchestrates the interaction between the user and Azure AI services.

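The following NuGet packages are required to use the Speech and Question Answering services from Azure and to read the appsettings.json file (`AddJsonFile` comes from Microsoft.Extensions.Configuration.Json):

```
Install-Package Microsoft.CognitiveServices.Speech
Install-Package Azure.AI.Language.QuestionAnswering
Install-Package Microsoft.Extensions.Configuration.Json
```

Configuration and Azure credentials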

The Speech and Question Answering services require the following settings, which are stored in the application's appsettings.json file:

```json
{
  "AzureCognitiveServices": {
    "SubscriptionKey": "", // Cognitive Services key, found under Keys and Endpoint of the Cognitive Services resource
    "Region": "northeurope", // Region where the Cognitive Services resource is located
    "VoiceName": "en-US-AriaNeural" // Full list of voices: https://aka.ms/speech/voices/neural
  },
  "QuestionAnswering": {
    "EndPoint": "https://[YOUR-LANGUAGE-RESOURCE].cognitiveservices.azure.com/", // Endpoint of the Language service resource (Keys and Endpoint); must match the key below
    "Credential": "", // Language service key, found under Keys and Endpoint of the Language service resource
    "ProjectName": "AzureCognitiveServices", // Name of the project created in Language Studio
    "DeploymentName": "production" // Name of the knowledge base deployment
  }
}
```

Main application

The main application orchestrates communication with the Speech SDK and the Question Answering service.

```csharp
using KnowledgeBase.Console.Services;
using Microsoft.Extensions.Configuration;

var configuration = new ConfigurationBuilder()
    .SetBasePath(Directory.GetCurrentDirectory())
    .AddJsonFile("appsettings.json")
    .Build();

Console.WriteLine("Hello, KnowledgeBase app is running!");

var speechService = new SpeechService(configuration);
var knowledgeBaseService = new KnowledgeBaseService(configuration);

Console.WriteLine("Ask a question...");
var question = await speechService.ListenSpeechAsync();

// Stop early if nothing was recognized; an empty question cannot be sent to the knowledge base.
if (string.IsNullOrWhiteSpace(question.InterpretedText))
{
    Console.WriteLine("Sorry, no speech could be recognized.");
    return;
}

Console.WriteLine($"Your question was: {question.InterpretedText}");

var answers = await knowledgeBaseService.FindAnswerAsync(question.InterpretedText);

// Print the answer (not the question) and guard against an empty result list.
var answerText = answers.Count > 0 ? answers[0].Text : "Sorry, I could not find an answer.";
Console.WriteLine($"Text answer: {answerText}");

await speechService.ReadSpeechLoudAsync(answerText);
```

SpeechService

SpeechService is a wrapper class that encapsulates the Speech SDK and provides methods to listen to speech and to synthesize speech. The SDK objects are disposed when the service itself is disposed, so the methods can be called more than once.

```csharp
using KnowledgeBase.Console.Domain;
using KnowledgeBase.Console.Interfaces;
using Microsoft.CognitiveServices.Speech;
using Microsoft.Extensions.Configuration;

namespace KnowledgeBase.Console.Services
{
    public class SpeechService : ISpeechService, IDisposable
    {
        private readonly SpeechSynthesizer _speechSynthesizer;
        private readonly SpeechRecognizer _speechRecognizer;

        public SpeechService(IConfiguration configuration)
        {
            var subscriptionKey = configuration["AzureCognitiveServices:SubscriptionKey"]
                ?? throw new InvalidOperationException("AzureCognitiveServices:SubscriptionKey is missing");
            var region = configuration["AzureCognitiveServices:Region"]
                ?? throw new InvalidOperationException("AzureCognitiveServices:Region is missing");
            // Set the voice name, refer to https://aka.ms/speech/voices/neural for the full list.
            var voiceName = configuration["AzureCognitiveServices:VoiceName"]
                ?? throw new InvalidOperationException("AzureCognitiveServices:VoiceName is missing");

            var config = SpeechConfig.FromSubscription(subscriptionKey, region);
            config.SpeechSynthesisVoiceName = voiceName;

            // Without an AudioConfig, both use the default speaker and microphone.
            _speechSynthesizer = new SpeechSynthesizer(config);
            _speechRecognizer = new SpeechRecognizer(config);
        }

        /// <summary>
        /// Listens to a single utterance from the microphone and returns it as text.
        /// </summary>
        public async Task<Text> ListenSpeechAsync()
        {
            // RecognizeOnceAsync returns after the first utterance (or a silence timeout).
            return Text.Create(await _speechRecognizer.RecognizeOnceAsync());
        }

        /// <summary>
        /// Receives plain text and reads it aloud.
        /// </summary>
        /// <param name="text">Text to synthesize.</param>
        public async Task<Speech> ReadSpeechLoudAsync(string text)
        {
            return Speech.Create(await _speechSynthesizer.SpeakTextAsync(text));
        }

        // Release the SDK objects once, when the service is no longer needed,
        // instead of after the first call (which made the service single-use).
        public void Dispose()
        {
            _speechRecognizer.Dispose();
            _speechSynthesizer.Dispose();
        }
    }
}
```
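SpeechService sets only `SpeechSynthesisVoiceName`, so the recognizer keeps the SDK's default recognition language, en-US. If you switch to a voice in another language, you may also want to set `SpeechConfig.SpeechRecognitionLanguage` to the matching locale. Neural voice names start with their locale (for example `en-US-AriaNeural` or `de-DE-KatjaNeural`), so a hypothetical helper like `LocaleFromVoiceName` below could derive it from the configured `VoiceName`; it assumes that naming pattern holds for the voice you pick.

```csharp
using System;

// Hypothetical helper: derives the locale from a neural voice name such as
// "en-US-AriaNeural" by dropping the last dash-separated segment (the voice itself).
// Assumes the "{locale}-{VoiceName}" naming pattern used by Azure neural voices.
string LocaleFromVoiceName(string voiceName)
{
    var lastDash = voiceName.LastIndexOf('-');
    if (lastDash <= 0)
        throw new ArgumentException($"Unexpected voice name format: '{voiceName}'", nameof(voiceName));
    return voiceName.Substring(0, lastDash);
}

Console.WriteLine(LocaleFromVoiceName("en-US-AriaNeural"));   // en-US
Console.WriteLine(LocaleFromVoiceName("de-DE-KatjaNeural"));  // de-DE
```

In the SpeechService constructor this would become `config.SpeechRecognitionLanguage = LocaleFromVoiceName(voiceName);`, placed before the recognizer is created, so listening and speaking use the same language.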

KnowledgeBaseService

KnowledgeBaseService is responsible for finding answers to text questions from the Azure Question Answering service.

```csharp
using Azure;
using Azure.AI.Language.QuestionAnswering;
using KnowledgeBase.Console.Domain;
using KnowledgeBase.Console.Interfaces;
using Microsoft.Extensions.Configuration;

namespace KnowledgeBase.Console.Services
{
    public class KnowledgeBaseService : IKnowledgeBaseService
    {
        private readonly QuestionAnsweringClient _questionAnsweringClient;
        private readonly QuestionAnsweringProject _questionAnsweringProject;

        public KnowledgeBaseService(IConfiguration configuration)
        {
            var endpoint = configuration["QuestionAnswering:EndPoint"]
                ?? throw new InvalidOperationException("QuestionAnswering:EndPoint is missing");
            var credential = configuration["QuestionAnswering:Credential"]
                ?? throw new InvalidOperationException("QuestionAnswering:Credential is missing");
            var projectName = configuration["QuestionAnswering:ProjectName"]
                ?? throw new InvalidOperationException("QuestionAnswering:ProjectName is missing");
            var deploymentName = configuration["QuestionAnswering:DeploymentName"]
                ?? throw new InvalidOperationException("QuestionAnswering:DeploymentName is missing");

            var knowledgeBaseConfig = new KnowledgeBaseConfig
            {
                Endpoint = new Uri(endpoint),
                Credential = new AzureKeyCredential(credential),
                ProjectName = projectName,
                DeploymentName = deploymentName
            };

            // The client targets the Language resource; the project identifies the deployed knowledge base.
            _questionAnsweringClient = new QuestionAnsweringClient(knowledgeBaseConfig.Endpoint, knowledgeBaseConfig.Credential);
            _questionAnsweringProject = new QuestionAnsweringProject(knowledgeBaseConfig.ProjectName, knowledgeBaseConfig.DeploymentName);
        }

        /// <summary>
        /// Finds answers from the knowledge base for a plain-text question.
        /// </summary>
        /// <param name="text">The question text.</param>
        public async Task<List<Answer>> FindAnswerAsync(string text)
        {
            return Answer.Create(await _questionAnsweringClient.GetAnswersAsync(text, _questionAnsweringProject));
        }
    }
}
```
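`GetAnswersAsync` can return several candidate answers, each with a confidence score, and the sample simply uses the first one. A minimal sketch of a more defensive choice: pick the highest-scoring answer and fall back to a fixed message when nothing reaches a threshold. Here answers are modeled as plain `(Text, Confidence)` tuples rather than the SDK's answer type, the sample texts are illustrative, and the 0.5 threshold is an arbitrary assumption to tune for your knowledge base (the SDK's `AnswersOptions` also has a `ConfidenceThreshold` property for filtering on the service side).

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Returns the text of the highest-confidence answer, or the fallback message
// when the list is empty or no answer reaches the (assumed) threshold.
string PickAnswer(IEnumerable<(string Text, double Confidence)> answers,
                  double threshold = 0.5,
                  string fallback = "Sorry, I could not find an answer.")
{
    var best = answers
        .Where(a => a.Confidence >= threshold)
        .OrderByDescending(a => a.Confidence)
        .FirstOrDefault();             // default tuple has Text == null when nothing qualifies
    return best.Text ?? fallback;
}

var candidates = new List<(string Text, double Confidence)>
{
    ("Azure Cognitive Services are cloud-based AI services.", 0.82),
    ("The Speech SDK exposes many Speech service capabilities.", 0.31),
};

Console.WriteLine(PickAnswer(candidates));
Console.WriteLine(PickAnswer(new List<(string Text, double Confidence)>()));
```

Reading a low-confidence answer aloud is often worse than admitting no match, which is why the fallback is spoken instead.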

Testing

When the console application is running, you can ask a question such as "What are Azure Cognitive Services?" and the application finds the answer in the Microsoft Docs articles added to the knowledge base and reads it aloud.

Summary

This was a fun and insightful small exercise. Overall, the Speech SDK is very intuitive to use. Nowadays it's easy to add voice-controlled functionality to your applications, and language support is already comprehensive. I covered only a small part of what is possible with Azure Cognitive Services, so I'll return to this topic later.

The full source code of this sample application can be found on GitHub.
